Contextual Document Assistant

Overview

Tech stack: Python, Google Collab, Hugging Faces, Radio

This system is designed to answer user questions based on the content of uploaded documents. It works by dividing the document into smaller parts, identifying the most relevant fragments for a given question, and then generating a clear and accurate response using that information. The user interacts through a simple interface where they can upload documents and ask questions in natural language. The system ensures that the answers are context-based and grounded in the content of the documents provided.

My contribution

The team

Authors: Ismael Meijide Giuliano Bardecio Joaquín Abeiro

Year

2025

Process

Table of Contents

- Introduction

- Development Process

- Challenges Encountered During System Construction

- Implemented Strategies

- System Results and Testing

- Embedding and Chunking Tests

- LLM Tests

Introduction

To implement the system required in the project, we defined the following technical requirements:

- Implemented in Python using a Google Colab notebook.

- Universal Sentence Encoder (USE) was used for embedding generation.

- Elasticsearch was used to store these embeddings in a vector database.

- Documents were chunked based on token count.

- LLM LLaMA-3.3-70B-Instruct-Turbo was selected to generate queries and formulate responses.

Development Process

To achieve the project objectives, we followed the steps below to fulfill all technical and optional requirements:

Document Chunking:

To store documents efficiently in the vector database, they must be split into smaller chunks, as most models have token processing limits. Large documents exceed this limit and must be divided. Additionally, chunking allows precise semantic search of specific parts.

- Token-based Chunking: Defines a max token count per chunk and an overlap parameter for redundancy between chunks.

- Paragraph-based Chunking: Less reliable if paragraphs are inconsistently formatted or vary greatly in size.

- Future improvements may include LangChain or LlamaIndex for smarter chunking.

Our implementation splits text into semantically consistent chunks using sentence parsing and max word thresholds.

Embedding Generation:

We compared models like "all-MiniLM-L6-v2", "Universal Sentence Encoder", "Word2Vec", and "Mistral-7B-v0.1". Based on cosine similarity tests, USE was chosen for its semantic accuracy and efficiency.

Each chunk was stored as a tuple {chunk, embedding} for easy retrieval.

Vector Storage:

Elasticsearch was selected as the vector search engine for its flexibility, reliability, and ease of integration. It supports storing large documents and performing semantic queries effectively.

Index Creation:

Each embedding-chunk pair was inserted into an Elasticsearch index. We tested various configurations for similarity search (dot product, cosine, Euclidean) and optimized "m" parameter for node connections. Best-performing configurations were used.

Chunk Indexing:

Chunks were indexed with their original text and a document ID, allowing retrieval of relevant sections from specific documents. tqdm was used for progress feedback.

Semantic Search:

To summarize documents based on user input:

- User input is improved using LLM to create a more effective query.

- The improved query is embedded with USE.

- Elasticsearch uses KNN to find the most relevant chunks.

Example:

Input: "What is the impact of human influence on ecosystems?"

Improved: "What are the effects of human activity on biodiversity and the balance of natural ecosystems?"

ChatGPT confirmed this improvement offered more specificity, better semantic structure, and scientific alignment.

Vector DB Query:

We use the generated embedding vector to query Elasticsearch. It returns the top 5 semantically similar chunks (k=5, num_candidates=10). These are used to build the final prompt.

Final Response Generation:

Using LLaMA 3.3 70B Instruct Turbo, the system:

- Accepts a user question.

- Rewrites it as a clear query.

- Retrieves relevant chunks using semantic search.

- Builds a context-rich prompt.

- Sends the prompt to the LLM.

- Displays a complete, evidence-based answer.

The system produced accurate, professional answers while preserving transparency.

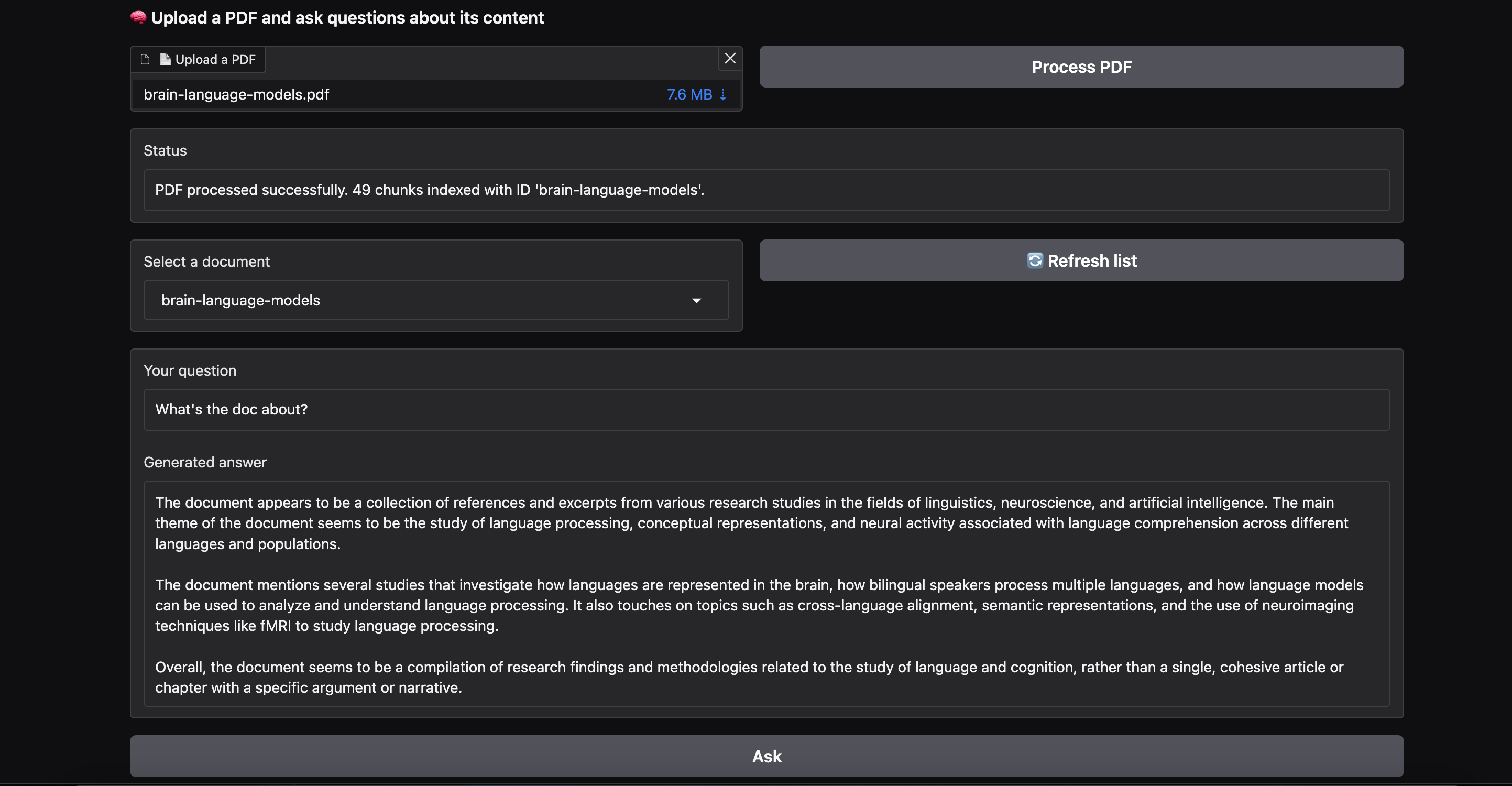

Gradio Interface Implementation:

To improve usability, we built a UI using Gradio. Initially, it accepted only text input. Later, PDF upload was enabled. Text was extracted using PyMuPDF, processed with spaCy, chunked, embedded, and indexed.

All components were integrated into the Gradio UI. Users can upload PDFs, view progress, ask questions, and get answers. Errors are handled gracefully.

Some subprocess errors occurred on package installation (normal in Colab), which can be fixed by reinstalling Together and Elasticsearch packages.

Challenges Encountered

- Model selection: We had to choose suitable models for both text generation and embedding creation under Colab's resource constraints. LLaMA 3.3 was chosen for remote inference capability.

- Embedding model evaluation: USE was chosen over MiniLM and others for its balance of semantic precision and integration simplicity.

- PDF text cleaning: Some documents had formatting issues; we had to clean and normalize the text for reliable chunking and embedding.

Implemented Strategies

- Query rewriting: User queries are rewritten by LLaMA 3.3 to be clearer and more information-retrieval friendly.

- Semantic consistency: Using the same embedding model for both documents and user input ensures search consistency.

- Chunk selection: KNN with

k=5,num_candidates=10is used to retrieve relevant document chunks. - Contextual prompt: The prompt includes both context and user question. If context is insufficient, the model is instructed to say so to avoid hallucinations.

System Results and Testing

Embedding & Chunking Tests:

MiniLM showed slightly higher average similarity (0.4407 vs 0.4232 for USE), but USE had easier integration with TensorFlow Hub, efficient batch processing, and reliable results. We decided to use USE for practicality.

LLM Tests:

The model generated coherent, professional summaries based on retrieved chunks. For out-of-context queries (e.g., "Things to do in New York"), it correctly identified a lack of relevant information.

The system effectively implemented the RAG pipeline: chunking, embedding, semantic search, and response generation. The chosen architecture and models were validated.

Try the demo here: https://huggingface.co/spaces/Chanito/RAG-System-Test